Tracking Terraform module versions across multiple accounts

March 7th, 2024

Tracking software component versions is critical for maintaining the stability and predictability of your infrastructure. This is especially true when a platform team produces common components [1] that multiple application teams use in their own infrastructure.

Knowing which version runs where makes it easier to identify required changes and affected environments when new bugs or security vulnerabilities are discovered, and Terraform modules are no different.

When a team delivers a Terraform module to other teams in an organization, a couple of ideas come up for how the module versions could be tracked:

- Version Control System: Crawl through the version control system (such as Git) to collect which versions teams have specified in their stacks. This gives a view into the declarative state stored in version control, but it has some shortcomings:
  - Teams can have different models for how they deploy their infrastructure. For many, the truth might be what's on the master branch, but others might use main, production, or hotfix-prod-20240131-runc.
  - What's on the master branch might not be what's actually deployed. The author strongly encourages GitOps, but not everybody buys into it, and the latest changes might only be deployed during the next deployment window.
- Terraform state files: Crawl through the Terraform remote state files stored, for example, in cloud object storage. However, module version information currently isn't stored in the state, so this won't work.
- Module Usage Logging: Modules report their version identifier when provisioned. The cleanest solution for this I've discovered is to store the module version during terraform apply in a known location, from which the version identifiers can be crawled across multiple accounts.

There are multiple good places to store this information. The following example uses AWS Systems Manager (SSM) Parameter Store, a key-value store.

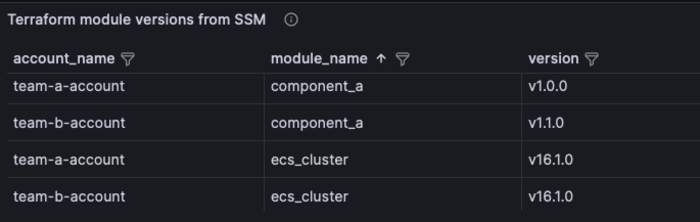

In the example below, team A's Terraform stack installs the module ComponentA at version v1.0.0. Team B uses the same module at the newer version v1.1.0.

```hcl
// team A: main.tf
module "ComponentA" {
  source = "git@github.com:hhamalai/ComponentA.git?ref=v1.0.0"
}
```

```hcl
// team B: main.tf
module "ComponentA" {
  source = "git@github.com:hhamalai/ComponentA.git?ref=v1.1.0"
}
```

The module ComponentA itself defines a few extra resources used for version tracking:

```hcl
// define all resources required by ComponentA
resource "..." "..." {
  ...
}
...

// a random suffix allows the module to be used multiple times in a single environment
resource "random_id" "suffix" {
  byte_length = 8
}

resource "aws_ssm_parameter" "version" {
  name  = "/some-prefix/terraform-module-version/component_a/${random_id.suffix.hex}"
  type  = "String"
  value = "v1.1.0" // bumped with every new release; the same identifier is used as the git tag
}
```

The version identifier value must be bumped for every release. With this scheme, version identifiers are added to or updated in SSM whenever a team installs or updates the module. Teams can pin a version with ?ref=v1.1.0 or just YOLO it and use the latest version. Either way, the recorded identifier is the one hardcoded in the module code they actually applied, which makes installed versions trackable.
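The following is a hypothetical illustration: team C and the module labels ComponentA_primary and ComponentA_secondary are made up for this sketch. It shows a stack that uses the module twice in one environment, once pinned and once unpinned. Without ?ref, Terraform fetches the repository's default branch, so that instance records whatever version string is hardcoded in the commit it downloaded. Each module instance creates its own random_id, so the two instances write two separate parameters instead of overwriting each other.

```hcl
// team C: main.tf (hypothetical example)

// pinned instance: records the version string hardcoded at the v1.1.0 tag
module "ComponentA_primary" {
  source = "git@github.com:hhamalai/ComponentA.git?ref=v1.1.0"
}

// unpinned instance: records the version string found in the downloaded default-branch commit
module "ComponentA_secondary" {
  source = "git@github.com:hhamalai/ComponentA.git"
}
```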

To query the version numbers across the accounts in the organization, some process needs to crawl through the accounts and collect these version numbers into database-like storage that can serve as a data source for queries and visualizations.
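The crawling step could itself be sketched in Terraform. This sketch assumes the AWS provider's aws_ssm_parameters_by_path data source and one provider alias per account, each assuming a read-only role. The account IDs, the role name module-version-reader and the region below are placeholders, not part of the scheme described above. The output maps each account to a map of parameter name and recorded version. Loading that into database-like storage is left out.

```hcl
// Hypothetical aggregator stack: account IDs, role name and region are placeholders.
provider "aws" {
  alias  = "team_a"
  region = "eu-west-1"
  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/module-version-reader"
  }
}

provider "aws" {
  alias  = "team_b"
  region = "eu-west-1"
  assume_role {
    role_arn = "arn:aws:iam::222222222222:role/module-version-reader"
  }
}

// Read every version parameter the modules have written under the shared prefix.
data "aws_ssm_parameters_by_path" "team_a" {
  provider  = aws.team_a
  path      = "/some-prefix/terraform-module-version"
  recursive = true
}

data "aws_ssm_parameters_by_path" "team_b" {
  provider  = aws.team_b
  path      = "/some-prefix/terraform-module-version"
  recursive = true
}

// account -> { parameter name -> version identifier }
// The provider treats parameter values as sensitive, so the output is marked sensitive too;
// "terraform output -json module_versions" still prints it in plain text.
output "module_versions" {
  sensitive = true
  value = {
    team_a = zipmap(data.aws_ssm_parameters_by_path.team_a.names, data.aws_ssm_parameters_by_path.team_a.values)
    team_b = zipmap(data.aws_ssm_parameters_by_path.team_b.names, data.aws_ssm_parameters_by_path.team_b.values)
  }
}
```

Terraform does not allow for_each on provider blocks, so every account needs its own provider and data source block here. With many accounts, a small script that uses the AWS SDK to iterate over the organization's accounts is probably the better fit.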

Exact and comprehensive visibility into module usage provides insight into how your solutions are being used. It also helps analyse the blast radius of changes, bugs and security issues in a multi-account organization.

[1] Common components can be a viable option when multiple teams are in charge of their own infrastructure, since not everyone should be inventing their infrastructure from scratch. Things like compute runtimes, security monitoring and logging can be packaged into components with a parameterized interface for customization.